Tuesday, June 27, 2017

Friday, June 23, 2017

Odoo 11: What Improvements Would Be Good to Have in the Community and Enterprise Editions?

Odoo 11 is planned for release in October 2017 and will come packed with many features. As per Fabien’s presentation at the Odoo Experience 2016 event, the new version will focus on the following features:

Odoo Community:

- Usability

- Speed

- New Design (from current Odoo Enterprise)

- Mobile

Odoo Enterprise:

- Accounting

- Localizations

- Services Companies

- Odoo Studio (make it even better)

- Reporting & Dashboard (a more BI-like tool)

- Improvements to existing Enterprise apps such as Helpdesk, Timesheets and Subscriptions

- New Appointment Scheduling App

- iOS App (maybe)

What would be good to have in Odoo 11?

The items mentioned above are part of the Odoo roadmap. But I am sure you would also like to voice your opinion and mention some of the things you would like to see as part of this release. Feel free to leave your comments, suggestions and updates.

Friday, June 16, 2017

Odoo WordPress WooCommerce Connector

We had a customer who was using WordPress and wanted to integrate their online store with Odoo. We configured the WooCommerce plugin along with a connector to Odoo. WooCommerce is an e-commerce tool that provides a platform for buying and selling anything, and it gives store owners and developers complete control.

Here Odoo is the backend engine for all business operations and WooCommerce hosts the online store, so integrating the WooCommerce store with Odoo is essential. Pragmatic offers a connector module for WooCommerce that manages and automates vital business processes. It saves time by instantly pushing items and inventory from your Odoo instance into WooCommerce and automatically importing WooCommerce orders and customer data into Odoo. Here are the steps we followed to get this working.

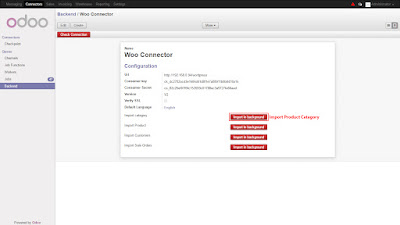

Setup & Configure your WooCommerce Instance

After installing this module, you will be able to see the menu for WooCommerce Instance configuration at the following path.

Path: Connectors → Queue → Backend menu.

Here you can select a preconfigured WooCommerce Instance from the list or, if no Instance is configured yet, click the Create more Instance link to create a new one.

We have to fill in the following information:

- URL

- Connector Key

- Connector Secret

- Version
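To make the role of these four fields concrete, here is a minimal sketch of how they could combine into a WooCommerce REST API request. It assumes the connector talks to the standard WooCommerce REST API, where the key and secret are sent as HTTP Basic credentials over HTTPS. The function name `buildWooRequest`, the shop URL and the key values are hypothetical, and the request is only built, not sent.

```javascript
// Sketch only: builds (but does not send) a WooCommerce REST API request.
// Field names mirror the instance form: URL, key, secret, version.
function buildWooRequest(instance, resource) {
  // Strip trailing slashes so the path joins cleanly.
  const base = instance.url.replace(/\/+$/, '');
  const url = `${base}/wp-json/wc/${instance.version}/${resource}`;
  // Over HTTPS, WooCommerce accepts the key and secret as HTTP Basic auth.
  const token = Buffer.from(`${instance.key}:${instance.secret}`).toString('base64');
  return { url, headers: { Authorization: `Basic ${token}` } };
}

// Hypothetical instance values, for illustration only.
const request = buildWooRequest(
  { url: 'https://shop.example.com/', key: 'ck_example', secret: 'cs_example', version: 'v2' },
  'products/categories'
);
console.log(request.url);
```

A wrong URL or version usually shows up as a 404 on this kind of request, and a wrong key or secret as a 401, which can help when checking a new instance.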

1. Import Category

WooCommerce Product Category list:

Here we are importing the product categories from WooCommerce into Odoo.

Odoo Sync Product Category:

Once we click on Import in background for categories, they are imported from WooCommerce into Odoo. The screenshot below shows the imported categories.

2. Import Product

WooCommerce Product List:

Here we are importing products from WooCommerce into Odoo.

Odoo Sync Product list:

Once we click on Import in background for products, they are imported from WooCommerce into Odoo. The screenshot below shows the imported products.

3. Import Customer

WooCommerce Customer List:

Here we are importing customers from WooCommerce into Odoo.

Odoo Sync Customers:

Once we click on Import in background for customers, they are imported from WooCommerce into Odoo. The screenshot below shows the imported customers.

4. Import Orders

WooCommerce Orders:

Here we are importing sale orders from WooCommerce into Odoo.

Odoo Sync Order:

Once we click on Import in background for sale orders, they are imported from WooCommerce into Odoo. The screenshot below shows the imported sale orders, which will be in the draft state.

Thursday, June 1, 2017

Business Intelligence Tools Comparison 2017 Pentaho Vs Power BI Vs Tableau Vs QlikView Vs SAP BO Vs MSBI Vs IBM Cognos Vs Domo Vs DUNDAS Vs MicroStrategy

There are many BI tools available in the market, which makes it very difficult to choose the right option. Some tools are open source and free of cost, and others are licensed. Some have ETL capabilities, while others are purely dashboarding and data visualization toolkits. We have compiled a comparison of some of these tools so that you can make an informed decision.

| Parameters | Pentaho | Power BI | Tableau | QlikView | Jaspersoft | SAP BO | MSBI | IBM Cognos | Domo | Dundas | MicroStrategy |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Launch Year | 2004 | 2014 | 2003 | 1993 | 2001 | 1990 | 2005 | 1969 | 2010 | 1992 | 1989 |
| Available Versions | Pentaho Community (CE), Pentaho Enterprise (EE) | Power BI (free), Power BI Pro (trial) | Tableau Public (free), Tableau Desktop (14-day free trial), Tableau Server (free trial), Tableau Online (free trial) | QlikView, Qlik Sense | JasperSoft Community, JasperSoft Enterprise Edition | SAP BusinessObjects | BIDS: SSIS, SSAS and SSRS come as a package with SQL Server | IBM Cognos - Business Object | DUNDAS BI | | |
| Free Trials | | | | | | | | | | | |
| Operating System Compatibility | Windows, Mac, Linux | Windows only | Windows and Mac (restricted) | Windows and Mac | Win/Mac/Linux | Win/Mac/Linux | Win/Mac/Linux | Win/Mac/Linux | Win/Mac/Linux | Win/Mac/Linux | Windows and Mac |
| Open Source | | | | | | | | | | | |
| ETL Tool | PDI (Pentaho Data Integration) | Built in; uses SSAS internally | Does not require ETL | Does not require ETL | Jasper ETL | BODS | SSIS | Built in | | | |
| Size of Organisations | Any size | Any size | Any size | Any size | Any size | Enterprise business | Any size | Any size | Any size | Enterprise business | Any size |
| Features | Data visualization, data warehousing, ETL, Schema Workbench, Weka, PRD report designer and Hadoop shims; works really well with big data | Self-service analytics, data refinement, data warehousing, data visualization | Free versions of Tableau support a limited set of data sources, while paid versions let you connect to more. Dashboards are self-service and more interactive, with lots of chart options, the best data visualization capabilities, and built-in help to find the best chart for a data set. Faster dashboard delivery, and Tableau Online to share reports. Features like "Show Me" are really helpful | Excellent product that leads the way in data discovery and visualisation. QlikView has more interactivity, gives you lots of chart options, and has built-in help to find the best chart for a data set. Faster dashboard delivery | Analysis, reporting, ETL, dashboards; cloud BI solutions available | Covers all BI verticals with full end-to-end software; wide presence and market leader in Fortune 100 companies | Data refinement, data warehousing, data visualization, CAS security, Active Directory management, cube management | Reporting, dashboarding, analysis, data integration, visualization, self-service data analysis, data warehousing, real-time data analysis | After the free trial, Domo takes care of all the requirements; no development or platform details provided | 45-day free trial, after which Dundas takes care of all the requirements; no development or platform details provided | |
| Category | Free open source BI | Free cloud BI | Proprietary BI software | Proprietary BI software | Free open source BI | Proprietary BI software | Proprietary BI software | Proprietary BI software | Proprietary BI software | Proprietary BI software | Proprietary BI software |
| Restrictions | Enterprise functionality missing in Community Edition: report scheduling, Active Directory integration, ad hoc reporting, Analyzer grids; no support facility | Data refresh: daily; data capacity limit: 1 GB (free); data streaming: 10K rows/hour; Power BI cannot be installed on an AWS server | Does not work with Unix/Linux; limited features in the free version; data used with the free version is made public; development cannot be done on Mac systems | Does not work with Unix/Linux; limited features in the free version; data used with the free version is made public | Enterprise functionality missing: ad hoc reporting | Adding a new data source is a big job in SAP BO, and you are fully dependent on the SAP team for implementation | Cannot define colors of your choice; poor UI; fewer charts to choose from; needs skilled developers to build the charts | Data capacity: 1,00,000 records or 500 MB, and not more than 50 columns | | | |
| Pros | Free and open source; support for all major data sources; no dependencies and works really well with all cloud systems; good ETL capabilities; good connectivity with Hadoop; includes the data mining tool Weka | Better visualization and analytics capabilities; faster dashboard delivery; simple to use and can be adopted by business users; self-service BI is something many BI users need | A large collection of data connectors and visualizations; intuitive design; faster and better analysis; does not require ETL for small-scale implementations; Tableau has paired with Informatica for ETL capabilities | Intuitive, easy to deploy, good at joining datasets together | Open source software with reporting and dashboarding facilities; provides all the required tools under one umbrella | Security and brand name are the biggest pros; the company provides training and support to its customers; good for big enterprises; highly scalable software | Highly secure as the data stays on premises; strong Active Directory authentication at both report level and data level; integrates very well with Microsoft products like Office and SQL Server | Faster and hassle-free dashboard delivery without any ETL or OLAP analysis | | | |
| Cons | Not stable; depends highly on a community that is not active; the dashboard-building approach is fairly technical and needs knowledge of SQL, HTML and JavaScript; self-service BI and mobile BI are not part of the Community Edition; development time is longer than with other tools; limited chart components; Pentaho Data Integration is often slow when dealing with larger data | Cannot handle larger data sets in the free version; need to learn DAX queries to work with the tool; fewer data sources to connect to in the free version | Bit of a learning curve; does not work well with all data sources in the free versions, but connects to all data sources in the paid versions | Bit of a learning curve; does not work well with all data sources in the free versions; comparatively costly | Self-service BI only available in Enterprise versions; reports are simple and less interactive | Implementation cost; adding a new data source is very messy; SAP is not very flexible with UI; cannot be used for predictive analysis; confusing licensing process; frequent support fee hikes | Fewer themes and designs; vanilla dashboards with less interactivity | They do not take projects of less than $50,000 and are less likely to negotiate | | | |
| Pricing | CE: free | Free (conditions apply) | Free (conditions apply) | Free trial | Free | Free trial | Free trial | Free trial | Free trial | Free trial | Free trial |
| | EE: negotiable, depending on the components you want; visit www.pentaho.com/contact | Power BI Pro: $9.99/user/month with 10 GB/user, refreshed hourly, with 1M rows/hour | https://www.tableau.com/pricing | $1,350/user; concurrent users are $15,000 | Purchase JasperSoft from www.jaspersoft.com | Purchase SAP BO from www.sap.com/india/product/analytics/bi-platform | Need to purchase the SQL Server suite with BI capabilities | $50,000 minimum charge to get started and about $1,500 per user after that | | | |
| Final Analysis/Comments on free versions | Good for companies trying BI for the first time: Pentaho helps in adopting the whole concept of BI with zero software cost. Enterprises with internal technical teams can also use Pentaho by training internal team members and enjoying the flexibility of open source Pentaho software. Pentaho is quickly adopting new analytics trends, which makes it an important contender in the BI market. | Came from Microsoft as a replacement for Excel, for companies that need near real-time, quick dashboarding with interactive visualization. Customers who are ready to pay subscription charges can get the best-in-class visualization of Power BI, though it needs knowledge of DAX queries to get the most out of it. | Companies that already work with Windows and are looking for interactive dashboards and reports without spending much time and money on ETL can use Tableau to create self-service BI. Tableau is a market leader in visualization and supports almost all data sources. It does not use DAX, which is an added advantage. | Quite similar to Tableau and Power BI but a very mature product, as it is one of the oldest data visualization and BI tools. Recent analysis shows it lacks a few of the new visualization features found in Tableau, but it is as good as Tableau and other BI tools, with quite a large customer base. Qlik Sense was launched with quite a few new analytics features. | Jaspersoft comes with a community edition that has almost all the features of Pentaho. A mature player with a good presence, but it lacks the predictive analytics and forecasting features that Pentaho has. Good for normal enterprise-level reporting. | A complete product that takes care of everything, from reporting to analytics to mining and OLAP. Costly in terms of implementation, but nothing seems impossible in the SAP BO space. A qualified team takes care of everything. | Good for companies that already use Microsoft products and are very strict about data security; that can compromise on look and feel but not on data integrity; that do not need the cloud and have self-sufficient infrastructure and an in-house technical team. | Here both companies implement everything at their end and their software is not freely available. After the trials you have to pay and get custom BI. | | | |

Odoo POS Product Loading Performance Improvement with AWS Lambda

Odoo POS works well and loads quickly if the number of products is reasonable. But if you need to load hundreds of products, Odoo POS can slow down, and each session could take more than 15 minutes to load the first time. To solve this performance issue, we used the power of the Amazon cloud to make loading faster. We use the AWS Lambda service along with Node.js. The following describes how we achieved better performance and load times using AWS Lambda.

Basic Lambda Function Creation in AWS

- After logging in to the AWS browser console, select the Lambda service from the list of AWS services.

- After selecting the Lambda service, you will be redirected to the Lambda page. Here you can see all the Lambda functions created with this AWS account.

- Click on the “Create a Lambda function” button. You will be redirected to another page listing the example Lambda functions available by default. Select “Blank Function”.

- After selecting “Blank Function”, you will be redirected to another page. Click on the “Next” button.

- You will then see the Lambda console, where you write your code. First enter the name of the Lambda function, then select the language in which you want to write it.

- After writing your code, configure the Lambda function according to your requirements. Leave the Handler value unchanged. Select the Role from the dropdown.

- You can also configure the execution time and memory limit under “Advanced settings”. Set them as below:

- You can test your function and check the log in your Lambda console.
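As a point of reference for the code step, the following is a minimal sketch of what a blank Node.js function could contain. It assumes the Handler value is left at the console default `index.handler`, which makes Lambda load index.js and call the exported `handler` function. The greeting logic and the sample event are purely illustrative.

```javascript
// Minimal Node.js Lambda handler in the callback style.
// With the Handler value "index.handler", Lambda loads index.js
// and calls the exported function named "handler".
const handler = (event, context, callback) => {
  // `event` carries the input payload; fall back to a default name.
  const name = (event && event.name) || 'world';
  // First argument is an error (null on success), second is the result.
  callback(null, { message: 'Hello, ' + name });
};
exports.handler = handler;

// Local smoke test: call the handler directly with a sample event.
handler({ name: 'Odoo' }, {}, (err, result) => {
  console.log(err, result);
});
```

The same sample event can be pasted into the console's test dialog to check the function and its log output there.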

AWS Lambda Connection to Postgres Database and Retrieving the Product file

Our main purpose in using a Lambda function is to improve the performance of generating a data dump file from the Postgres database. I have written the AWS Lambda function in Node.js, using the available Postgres library to connect to Postgres.

A simple Lambda function does not need any folder or additional files. But since we are using an external Node.js library, we have the following folder structure:

- Create a folder on your system and keep all your files and the module folder inside it.

- The main file is index.js, which Lambda looks for whenever we upload a zip file in the Lambda console. This file is mandatory in a Lambda function.

- Next is our connection file (connection.js). We created this separate file for reusability, so that any other file that needs a DB connection can use it.

- Next is the node_modules folder. This folder is created when we install any Node.js library.

To upload all this to Lambda, zip all your custom files, folders and the index.js file, and upload that zip file in the Lambda console.

Below is the main index.js file, which Lambda treats as the default file to execute:

    // index.js: Lambda entry point. Dumps product_product to a JSON file on S3.
    var pool = require('./connection');
    var AWS = require('aws-sdk');

    // Placeholder credentials and bucket name; replace with your own.
    AWS.config.update({accessKeyId: 'yOURaCCESSkEYiD', secretAccessKey: 'yOURaCCESSsECRETkEY'});
    var s3bucket = new AWS.S3({params: {Bucket: 'BucketName'}});

    exports.handler = (event, context, callback) => {
      pool.connect(function (err, client, done) {
        if (err) {
          // Stop here: client is undefined when the connection fails.
          return callback('error fetching client from pool ' + err, null);
        }
        client.query('SELECT * FROM product_product ORDER BY id', function (err, result) {
          if (err) {
            callback(err, null);
          } else {
            // Serialize the rows and store them as one JSON file in the bucket.
            var params = {
              Key: 'sbarro_5.json',
              Body: JSON.stringify(result.rows)
            };
            s3bucket.upload(params, function (err, res) {
              if (err)
                callback('Error in uploading file on s3 due to ' + err, null);
              else
                callback(null, 'File successfully uploaded.');
            });
          }
          // Close the Postgres connection once the query has returned.
          client.end(function (err) {
            if (err) callback('Error in closing the Postgres connection due to ' + err, null);
          });
        });
      });
    };


The code above runs our query against the Postgres database and generates a file from the data it reads.

The code breaks down as follows:

- The first line includes the connection file, which we wrote in Node.js to connect to our Postgres DB.

- The second line includes aws-sdk. This library is pre-installed in the Lambda environment. I use it to access the AWS S3 bucket where the generated file is stored.

- After that, I provide the credentials used to connect to the S3 bucket.

- “client.query('SELECT * FROM product_product ORDER BY id', function (err, result)” is the important call: it runs the query that fetches data from the database.

- After the data is fetched into result.rows, I convert it to JSON and pass it as the body of a JSON file stored in the S3 bucket.

- “s3bucket.upload(params, function (err, res)” uploads the JSON file to the S3 bucket.

- Finally, we call client.end(function (err) {}) to end the Postgres connection and exit our Lambda function execution.
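The flow in this breakdown (connect, query, upload, close) can be tried locally without AWS or Postgres. The sketch below is not the author's code: `makeHandler`, the stand-in pool and bucket objects, and the `products.json` key are hypothetical, and unlike the original it closes the connection after the upload finishes so the callback fires only once.

```javascript
// Sketch: the connect -> query -> upload -> end flow, with the pool and
// bucket passed in so it can run locally. makeHandler is hypothetical.
function makeHandler(pool, s3bucket) {
  return (event, context, callback) => {
    pool.connect((err, client) => {
      if (err) return callback('error fetching client from pool ' + err, null);
      client.query('SELECT * FROM product_product ORDER BY id', (err, result) => {
        if (err) return client.end(() => callback(err, null));
        const params = { Key: 'products.json', Body: JSON.stringify(result.rows) };
        s3bucket.upload(params, (err) => {
          // Close the connection before reporting the outcome.
          client.end(() => {
            if (err) return callback('Error in uploading file on s3 due to ' + err, null);
            callback(null, 'File successfully uploaded.');
          });
        });
      });
    });
  };
}

// In-memory stand-ins for the Postgres pool and the S3 bucket.
const uploaded = [];
const fakeClient = {
  query: (sql, cb) => cb(null, { rows: [{ id: 1 }, { id: 2 }] }),
  end: (cb) => cb(),
};
const fakePool = { connect: (cb) => cb(null, fakeClient) };
const fakeBucket = { upload: (params, cb) => { uploaded.push(params); cb(null, {}); } };

makeHandler(fakePool, fakeBucket)({}, {}, (err, message) => {
  console.log(err, message, uploaded[0].Body);
});
```

Passing the dependencies in keeps the handler logic testable. In a real deployment the same function would receive the pool from connection.js and the aws-sdk S3 object.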

Below is the connection.js file, which index.js requires:

    // connection.js: shared Postgres connection pool.
    const pg = require('pg');

    // Placeholder settings; replace with your POS database credentials.
    var config = {
      database: 'DBname',
      user: 'Username',
      password: 'Password',
      host: 'IP',
      port: 'XXXX',
      idleTimeoutMillis: 1500000, // how long an idle client stays open before it is closed
    };

    const pool = new pg.Pool(config);

    // Log errors raised by idle clients in the pool.
    pool.on('error', function (err, client) {
      console.error('idle client error', err.message, err.stack);
    });

    // Run a single query on any available client.
    module.exports.query = function (text, values, callback) {
      return pool.query(text, values, callback);
    };

    // Check out a client from the pool.
    module.exports.connect = function (callback) {
      return pool.connect(callback);
    };

The code breaks down as follows:

- The first line includes the “pg” library, which connects to Postgres from Node.js.

- The config variable holds all the credentials, which we need to change to match our POS database connection.

- The rest of the code connects to the database and reports an error if there is any issue with the connection.

NOTE: To connect to Postgres from Node.js, we don’t need to make any changes in the Postgres database. We just need the credentials mentioned above.

HOW TO CALL AWS LAMBDA FUNCTION IN YOUR APPLICATION

We can use a Lambda function in different applications by integrating it with another AWS service, API Gateway. With this integration, a single Lambda function can serve multiple applications that need the same functionality. To integrate a Lambda function with API Gateway, follow the steps below:

- Select the API Gateway service from the services listed in AWS.

- Click on the “Create API” button. You will get a page where you have to name your API. After that, click on the “Create API” button.

- Select the “Create Method” option from the Action dropdown, then select the “GET” method from the dropdown highlighted in blue. You will see the screen below. After that, keep “Integration Type” as Lambda Function. Select your Lambda region from the dropdown; you will then get the option to select your Lambda function name. Then save your GET method.

- After saving the GET method, you will see the screen below. Select the “Deploy API” option from the Action dropdown at the top. Once you click on “Deploy API”, you will see a popup. Create a deployment stage.

- Once you deploy your API, you will get the URL through which you can access your Lambda function.
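As a rough illustration of the last step, the sketch below builds an invoke URL in the usual API Gateway shape and shows one way a client could call it. The API id `abc123`, the region, the stage name `prod` and the helper names are all hypothetical, and the call itself is left commented out because it relies on the global `fetch` found in browsers and Node.js 18 or later.

```javascript
// Sketch: build an API Gateway invoke URL and call it.
// apiId, region and stage are hypothetical placeholders.
function buildInvokeUrl(apiId, region, stage, path = '') {
  return `https://${apiId}.execute-api.${region}.amazonaws.com/${stage}${path}`;
}

// Calls the deployed GET method and returns the parsed JSON body.
// Relies on the global fetch available in browsers and Node.js 18+.
async function callLambda(url) {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error('API Gateway returned HTTP ' + response.status);
  }
  return response.json();
}

const invokeUrl = buildInvokeUrl('abc123', 'us-east-1', 'prod');
console.log(invokeUrl);
// Uncomment to make the request against a real deployment:
// callLambda(invokeUrl).then(console.log).catch(console.error);
```

Any application that can make an HTTPS GET request can trigger the function this way, which is what makes one Lambda function reusable across several applications.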

HOW TO USE AWS LAMBDA FUNCTION IN ODOO POS APPLICATION
